This blog post explores the latest advancements in Amazon Bedrock and shows how you can use new and custom models to build, experiment with, and responsibly scale your generative AI applications.

Amazon Titan Text Embeddings V2 is a text embeddings model optimised for Retrieval-Augmented Generation (RAG). It offers flexible output vector sizes (1,024, 512, or 256 dimensions) and improved unit vector normalisation, so you can choose the balance between retrieval accuracy and storage and compute efficiency that suits your workload. By using the high-quality embeddings generated by Amazon Titan Text Embeddings V2, you can improve retrieval in your RAG-based applications, helping your large language models (LLMs) receive the most relevant context and produce accurate, on-point responses.
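To make the vector-size and normalisation options concrete, here is a minimal Python sketch that requests Titan Text Embeddings V2 vectors through the Bedrock Runtime `InvokeModel` API and ranks passages by similarity. It assumes the model ID `amazon.titan-embed-text-v2:0` and the request fields `inputText`, `dimensions`, and `normalize`, with the vector returned in an `embedding` field; check these against the current Bedrock documentation for your Region. The helper names (`build_embedding_request`, `embed`, `is_unit_vector`, `rank_by_similarity`) are hypothetical, and the client is passed in so the code does not depend on AWS credentials.

```python
import json
import math
from typing import Any, Sequence

# Assumed model ID for Titan Text Embeddings V2; verify in the Bedrock console.
TITAN_V2_MODEL_ID = "amazon.titan-embed-text-v2:0"
# Output vector sizes offered by Titan Text Embeddings V2.
SUPPORTED_DIMENSIONS = (256, 512, 1024)


def build_embedding_request(text: str, dimensions: int = 1024, normalize: bool = True) -> str:
    """Serialise an InvokeModel request body (field names are assumptions)."""
    if dimensions not in SUPPORTED_DIMENSIONS:
        raise ValueError(f"dimensions must be one of {SUPPORTED_DIMENSIONS}")
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize})


def embed(client: Any, text: str, dimensions: int = 1024, normalize: bool = True) -> list:
    """Call InvokeModel on a bedrock-runtime client and return the embedding vector."""
    response = client.invoke_model(
        modelId=TITAN_V2_MODEL_ID,
        body=build_embedding_request(text, dimensions, normalize),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it and pull out the assumed "embedding" field.
    payload = json.loads(response["body"].read())
    return payload["embedding"]


def is_unit_vector(vec: Sequence[float], tol: float = 1e-3) -> bool:
    """Check that a vector has (approximately) unit length."""
    return math.isclose(math.sqrt(sum(x * x for x in vec)), 1.0, abs_tol=tol)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product; for unit-length vectors this equals cosine similarity."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    return sum(x * y for x, y in zip(a, b))


def rank_by_similarity(query: Sequence[float], passages: dict, k: int = 3) -> list:
    """Return the k (passage_id, score) pairs scoring highest against the query."""
    scored = [(pid, dot(query, vec)) for pid, vec in passages.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]
```

With a real client you would create it with `boto3.client("bedrock-runtime")` and call, for example, `embed(client, "What is RAG?", dimensions=512)`. Smaller `dimensions` values shrink your vector store at some cost in accuracy, and because `normalize=True` requests unit vectors, the plain dot product in `rank_by_similarity` behaves as cosine similarity.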

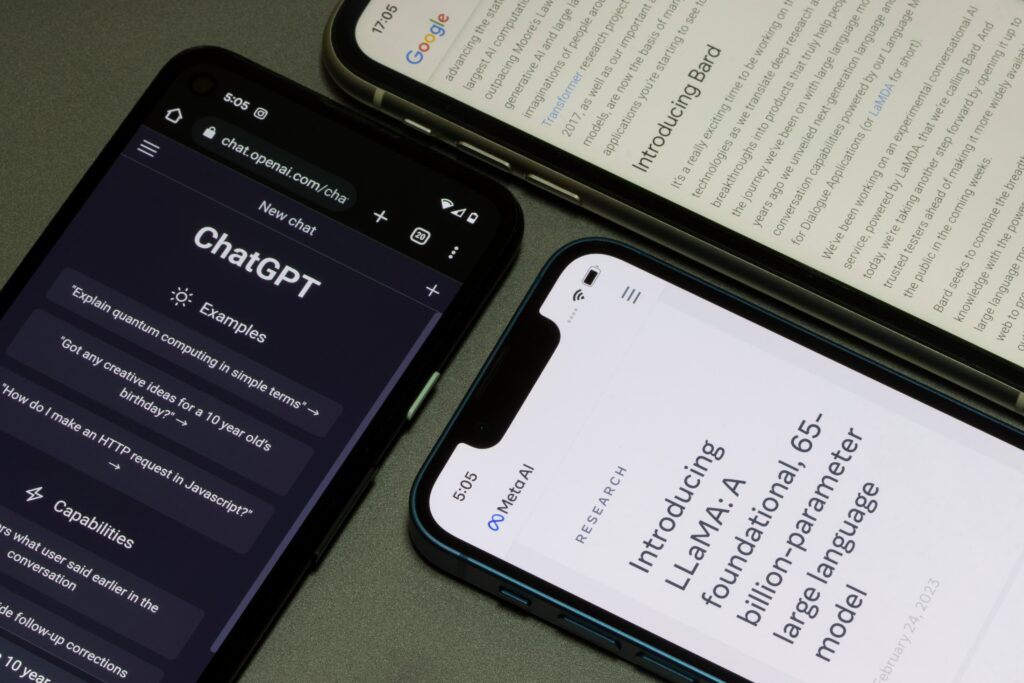

Next, we explore the arrival of Meta’s Llama 3 models in Amazon Bedrock. These LLMs, available in 8B and 70B parameter sizes, were trained on a dataset of over 15 trillion tokens that includes four times more code than the dataset used for Llama 2. With significant improvements in reasoning, code generation, and instruction following, the Llama 3 models offer a versatile foundation for a wide range of generative AI use cases, from content creation and conversational AI to language understanding and enterprise applications.
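Llama 3 instruct models expect prompts in Meta's chat template, which marks each turn with special header and end-of-turn tokens. The sketch below formats a single-turn prompt with that template and sends it through `InvokeModel`. It assumes the model ID `meta.llama3-8b-instruct-v1:0` and the Bedrock request fields `prompt`, `max_gen_len`, `temperature`, and `top_p`, with the completion returned in a `generation` field; treat these as assumptions to confirm against the Bedrock model parameters documentation. The function names `format_llama3_prompt` and `generate` are hypothetical.

```python
import json
from typing import Any, Optional

# Assumed Bedrock model ID for Llama 3 8B Instruct; the 70B variant is assumed
# to be "meta.llama3-70b-instruct-v1:0".
LLAMA3_8B_MODEL_ID = "meta.llama3-8b-instruct-v1:0"


def format_llama3_prompt(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Wrap a single user turn (and optional system prompt) in the Llama 3 instruct template."""
    parts = ["<|begin_of_text|>"]
    if system_prompt:
        parts.append(f"<|start_header_id|>system<|end_header_id|>\n\n{system_prompt}<|eot_id|>")
    parts.append(f"<|start_header_id|>user<|end_header_id|>\n\n{user_message}<|eot_id|>")
    # Leave the assistant header open so the model writes the reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


def generate(
    client: Any,
    user_message: str,
    system_prompt: Optional[str] = None,
    max_gen_len: int = 512,
    temperature: float = 0.5,
    top_p: float = 0.9,
    model_id: str = LLAMA3_8B_MODEL_ID,
) -> str:
    """Send a formatted prompt to a bedrock-runtime client and return the generated text."""
    body = json.dumps({
        "prompt": format_llama3_prompt(user_message, system_prompt),
        "max_gen_len": max_gen_len,
        "temperature": temperature,
        "top_p": top_p,
    })
    response = client.invoke_model(
        modelId=model_id, body=body, contentType="application/json", accept="application/json"
    )
    return json.loads(response["body"].read())["generation"]
```

In practice you would pass `boto3.client("bedrock-runtime")` as `client`. Building the template by hand keeps the example close to the raw model interface; the Bedrock Converse API, where available, applies the model's chat template for you.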

Finally, we look at the introduction of custom model import in Amazon Bedrock. This preview feature lets you bring your own customised models, such as fine-tuned versions of supported architectures like Llama 2, Llama 3, and Mistral, and use them in your Amazon Bedrock-powered solutions alongside the models Bedrock already offers. By using specialised models, you can tailor the behaviour of your generative AI applications to your specific domain, which can improve accuracy, relevance, and user experience.
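At a high level, importing a model means uploading its weights (for example, in Hugging Face format) to Amazon S3 and starting an import job that points Bedrock at that location. The sketch below assumes the boto3 `bedrock` control-plane client's `create_model_import_job` and `get_model_import_job` operations with the parameter names shown, and the job statuses `Completed` and `Failed`. Because the feature was in preview, treat these names, and the hypothetical helpers `validate_s3_uri`, `start_import`, and `wait_for_import`, as assumptions to confirm against the current API reference. Any job name, role ARN, or S3 URI you pass in is a placeholder.

```python
import time
from typing import Any, Callable

# Assumed terminal states reported by get_model_import_job.
TERMINAL_STATUSES = ("Completed", "Failed")


def validate_s3_uri(uri: str) -> str:
    """Reject anything that is not an s3:// URI with something after the scheme."""
    prefix = "s3://"
    if not uri.startswith(prefix) or len(uri) == len(prefix):
        raise ValueError(f"expected an s3:// URI, got {uri!r}")
    return uri


def start_import(client: Any, job_name: str, model_name: str, role_arn: str, s3_uri: str) -> str:
    """Start a model import job on a bedrock client and return its job ARN."""
    response = client.create_model_import_job(
        jobName=job_name,
        importedModelName=model_name,
        roleArn=role_arn,  # IAM role Bedrock assumes to read the weights from S3
        modelDataSource={"s3DataSource": {"s3Uri": validate_s3_uri(s3_uri)}},
    )
    return response["jobArn"]


def wait_for_import(
    client: Any,
    job_arn: str,
    poll_seconds: float = 60.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll the job until it reaches a terminal status, or raise TimeoutError."""
    for _ in range(max_polls):
        job = client.get_model_import_job(jobIdentifier=job_arn)
        if job["status"] in TERMINAL_STATUSES:
            return job
        sleep(poll_seconds)
    raise TimeoutError(f"import job {job_arn} did not finish after {max_polls} polls")
```

You would pass `boto3.client("bedrock")` to these helpers. Once a job reports `Completed`, its response is expected to include an `importedModelArn`, which you can then use as the `modelId` in a `bedrock-runtime` `InvokeModel` call, just like the built-in models above. The injectable `sleep` parameter is only there so the polling loop can be exercised without waiting.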